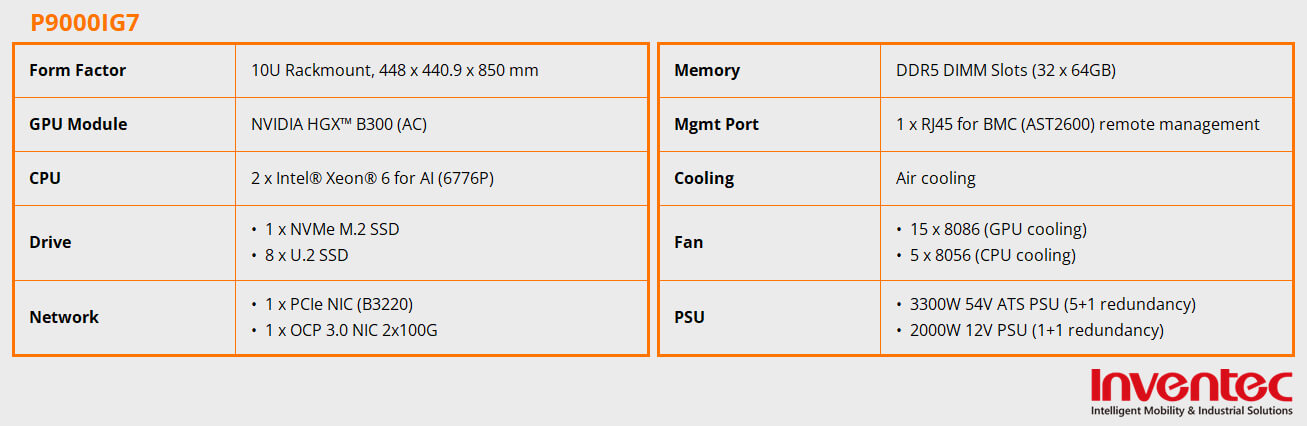

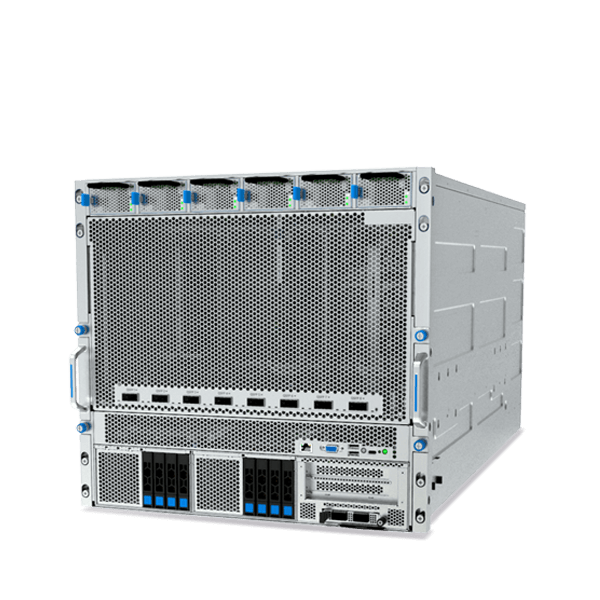

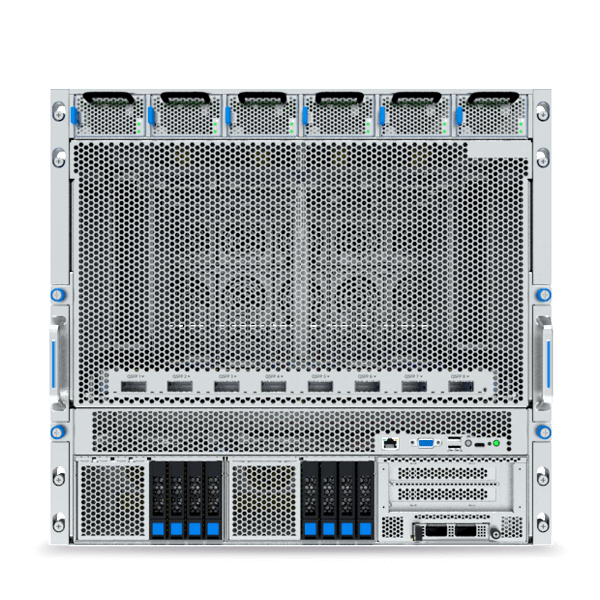

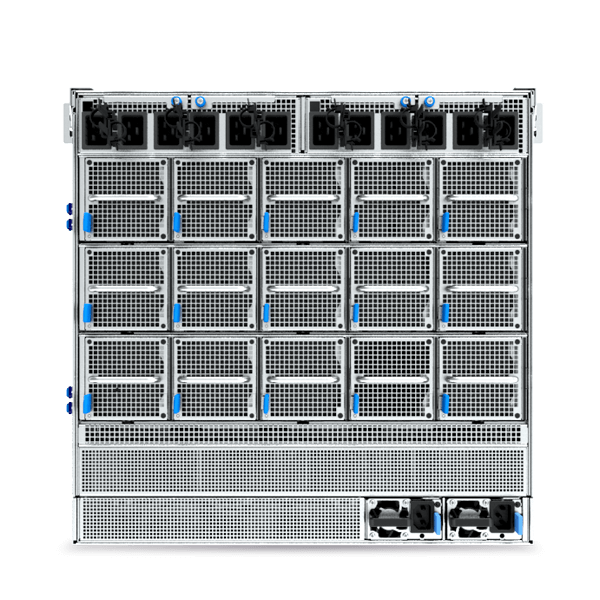

- 10U air-cooled AI server featuring NVIDIA HGX™ B300 GPUs.

- Dual Intel® Xeon® 6 processors.

- Compute capability for next-generation AI infrastructure.

- High-speed interconnects for efficient AI workload execution.

- Designed to enhance system uptime and maintenance efficiency.

Certifications: UL, VCCI, USA FCC, Certification Body (CB), Conformité Européenne (CE)

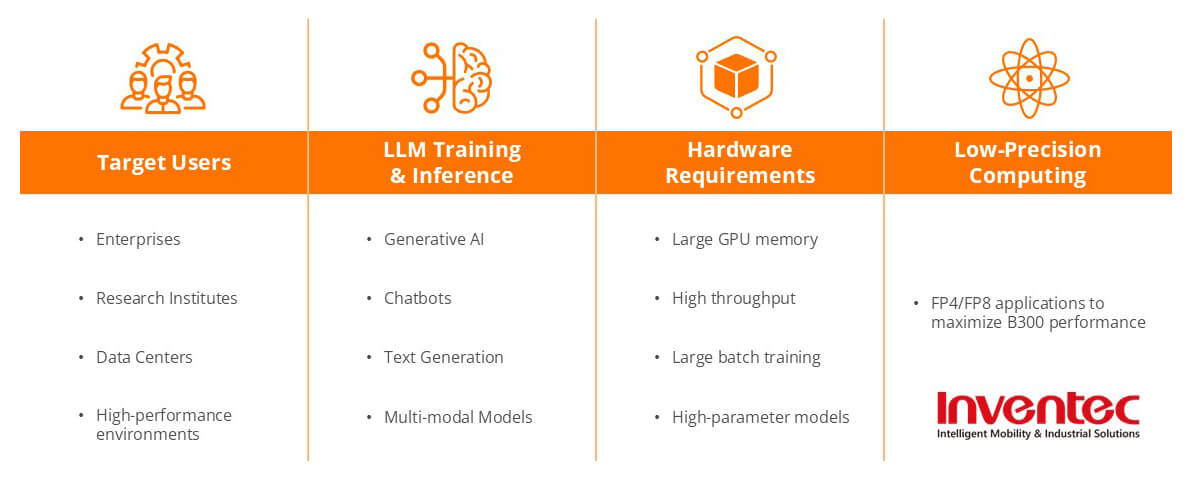

P9000IG7 (AC) is more than a high-performance server: it is a cornerstone of next-generation intelligent infrastructure. It was designed from the outset to meet the rapidly growing demands of artificial intelligence and high-performance computing. By combining the latest Intel® Xeon® 6 processors (a series offered in both E-core and P-core variants) with the NVIDIA HGX™ B300 GPU platform, it delivers the compute power needed to drive breakthroughs in machine learning, model training, and real-time analytics.

With its precisely engineered air-cooled thermal design, P9000IG7 achieves efficient heat dissipation and stable thermal management, providing a robust and future-ready foundation for high-intensity workloads.

HGX™ B300 GPU Features:

P9000IG7 (AC) adopts a collaborative dual-processor, eight-GPU architecture: two Intel® Xeon® 6 processors work in conjunction with an HGX™ B300 eight-GPU system to deliver massive parallel computing capability while balancing performance and energy efficiency. This architecture provides the flexibility and speed needed for a wide range of AI workloads, from training to inference. Housed in a 10U chassis, the system supports up to eight U.2 NVMe SSDs for high-speed local access to large training datasets. The NVIDIA ConnectX®-8 SuperNIC™ network adapter integrated on the motherboard reduces latency and increases throughput in distributed environments, tightly integrating compute, storage, and networking to support large-scale, high-performance AI applications.

P9000IG7 (AC) uses DDR5 memory running at up to 6400 MT/s with one DIMM per channel and up to 5200 MT/s with two DIMMs per channel, providing high memory bandwidth to the CPUs and accelerating access to training data. Multi-GPU high-speed interconnect is provided by NVIDIA NVLink™ Bridge, enabling efficient collaboration and high throughput for multi-GPU workloads. In addition, the system integrates a retimer board designed by Inventec IMIS that maintains signal integrity for high-speed data exchange between the CPU and GPU modules, sustaining performance and stability in data-intensive environments.
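
As a rough guide to what the DDR5 transfer rates quoted above mean, the theoretical peak bandwidth of one memory channel is the transfer rate multiplied by the channel's data width. This worked example is a sketch under stated assumptions: a 64-bit (8-byte) data path per channel, ECC bits excluded, and decimal units. Sustained bandwidth in practice is lower, and the system-wide total depends on the number of populated channels, which is not specified here.

$$
B_{\text{peak}} = R \times \frac{64\ \text{bit}}{8\ \text{bit/B}} = R \times 8\ \text{B}
$$

$$
6400\ \text{MT/s} \times 8\ \text{B} = 51.2\ \text{GB/s}, \qquad 5200\ \text{MT/s} \times 8\ \text{B} = 41.6\ \text{GB/s}
$$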

AI infrastructure must balance performance, stability, and maintenance efficiency. P9000IG7 (AC) features a modular architecture built around serviceability, with tool-less mechanisms that provide rapid access to key components and reduce maintenance time and cost. Separating the main system from the GPU modules improves airflow zoning and thermal efficiency, ensuring stable performance and flexible maintenance in high-density computing environments.